Adding an External Model

Introduction

Nexus lets you connect different Large Language Models (LLMs) to suit specific needs, whether that means optimizing cost, improving performance, or accessing specialized knowledge. This guide walks you through integrating external LLMs and using Nexus’s built-in configurations with your agents.

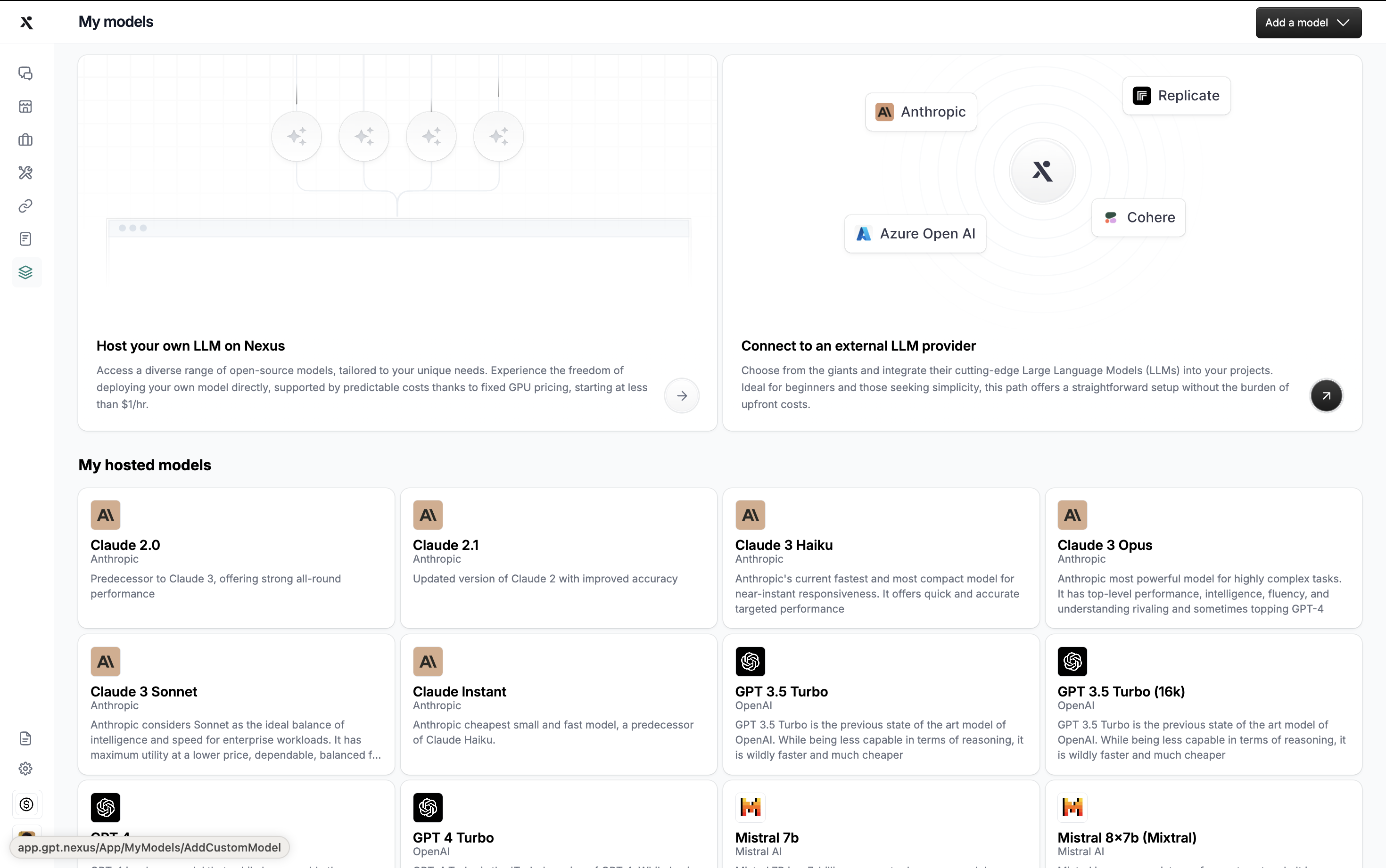

Select an Existing Configuration on Nexus

Beyond external providers, Nexus comes with a set of pre-configured models that are ready for immediate use and optimized for both cost efficiency and output quality.

Select a Model from an External Provider

Why Use External Providers?

Opting for an external LLM provider allows users to control costs more effectively, switching from a flat rate to a pay-per-use model. This approach is advantageous for those who:

- Want expenses that scale with actual usage.

- Have intermittent or variable usage requirements.

- Require specialized models tailored for unique tasks.
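To make the flat-rate versus pay-per-use trade-off concrete, here is a minimal sketch of a break-even calculation. The function names and every price in it are hypothetical placeholders chosen for illustration; they are not Nexus or provider pricing, so substitute the figures from your own plan and provider.

```python
def monthly_pay_per_use_cost(tokens_per_month: int,
                             price_per_million_tokens: float) -> float:
    """Cost of a month of usage when billed per token."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def break_even_tokens(flat_monthly_fee: float,
                      price_per_million_tokens: float) -> float:
    """Monthly token volume at which both billing models cost the same."""
    if price_per_million_tokens <= 0:
        raise ValueError("price_per_million_tokens must be positive")
    return flat_monthly_fee / price_per_million_tokens * 1_000_000


def cheaper_option(tokens_per_month: int,
                   flat_monthly_fee: float,
                   price_per_million_tokens: float) -> str:
    """Return which billing model is cheaper for the given usage."""
    usage_cost = monthly_pay_per_use_cost(tokens_per_month,
                                          price_per_million_tokens)
    return "pay-per-use" if usage_cost < flat_monthly_fee else "flat rate"


if __name__ == "__main__":
    # Hypothetical numbers: a 50.00 flat fee vs. 2.00 per million tokens.
    print(break_even_tokens(50.0, 2.0))
    print(cheaper_option(5_000_000, 50.0, 2.0))
```

Usage below the break-even volume favors pay-per-use, which is why intermittent or variable workloads tend to benefit from an external provider.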

Supported External Providers on Nexus

Nexus currently supports the following providers:

- Anthropic

- Google AI

- Mistral AI

- Cohere

- Replicate

- Bedrock

- Gradient AI

- HuggingFace

- OpenAI

- Azure OpenAI

- Ollama (for self-hosted models on external servers)

How to Add an External Provider

- Navigate to the Models section and click on "Connect to an external LLM provider."

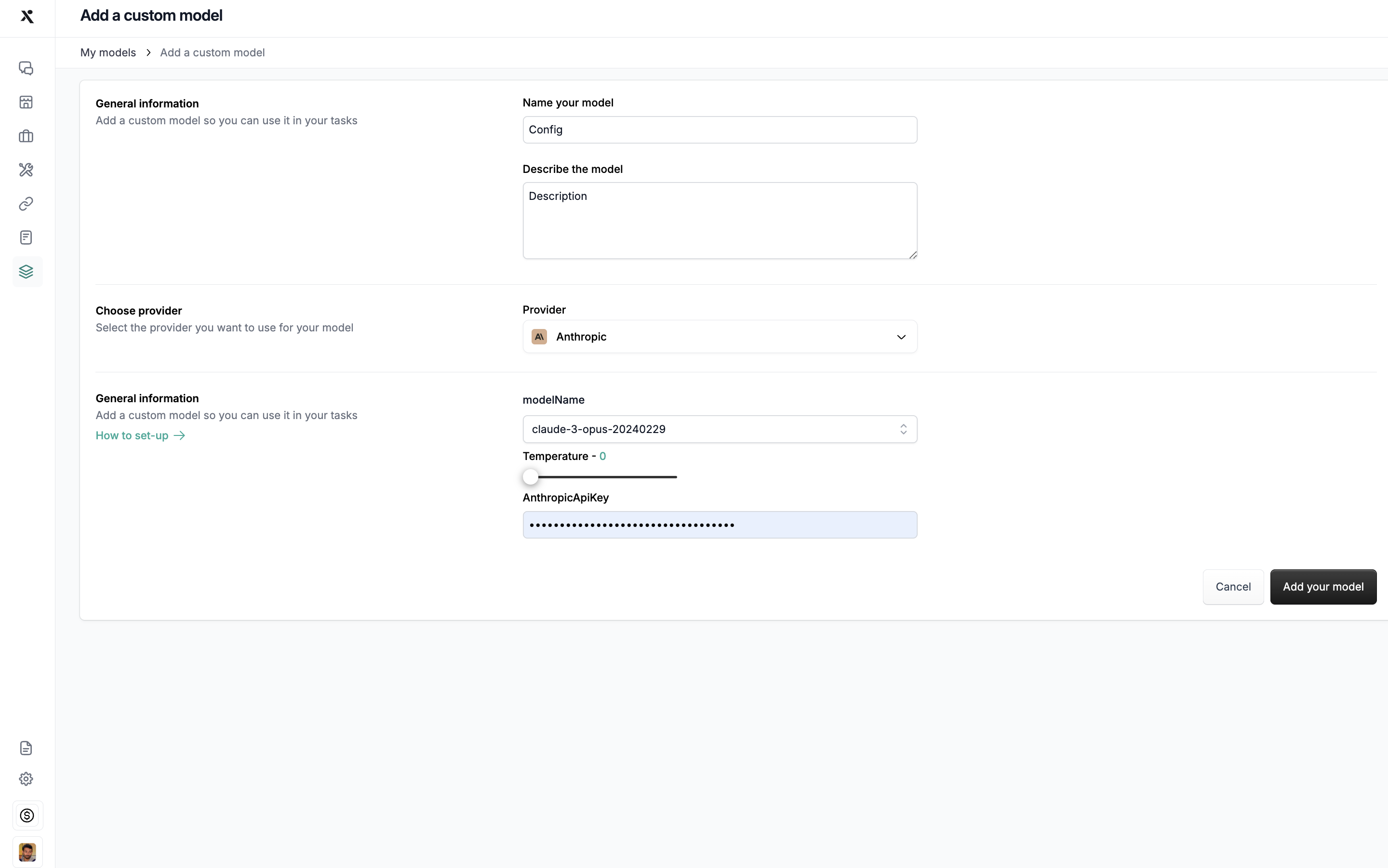

- On the model configuration screen, enter a name for your configuration and provide a detailed description.

- Select your desired provider from the supported list.

- Input the API key for the chosen LLM. If you need assistance, click on the "How to setup" guide adjacent to the API key field for detailed instructions.

- Configure your model settings, such as top-p and temperature, to adjust the model's output behavior to your task requirements.

Once you complete these steps, the LLM from the external provider is added and ready to be used with your tasks and agents within Nexus.
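The temperature and top-p settings mentioned in the steps above control how a model samples its next token. The sketch below shows how these two parameters are commonly defined (temperature-scaled softmax followed by nucleus filtering). It is an illustration of the general technique, not a description of how Nexus or any particular provider implements it, and the function names are made up for this example.

```python
import math


def apply_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs: list[float], top_p: float) -> dict[int, float]:
    """Keep the smallest set of most likely tokens whose mass reaches top_p."""
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in (0, 1]")
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept: list[int] = []
    cumulative = 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    # Renormalize so the surviving probabilities sum to 1.
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


if __name__ == "__main__":
    probs = apply_temperature([2.0, 1.0, 0.0], temperature=0.5)
    print(top_p_filter(probs, top_p=0.9))
```

In general, lower temperature and lower top-p make output more deterministic, which suits extraction or classification tasks, while higher values allow more varied output for creative tasks.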

Hosting Your Own LLM on Nexus

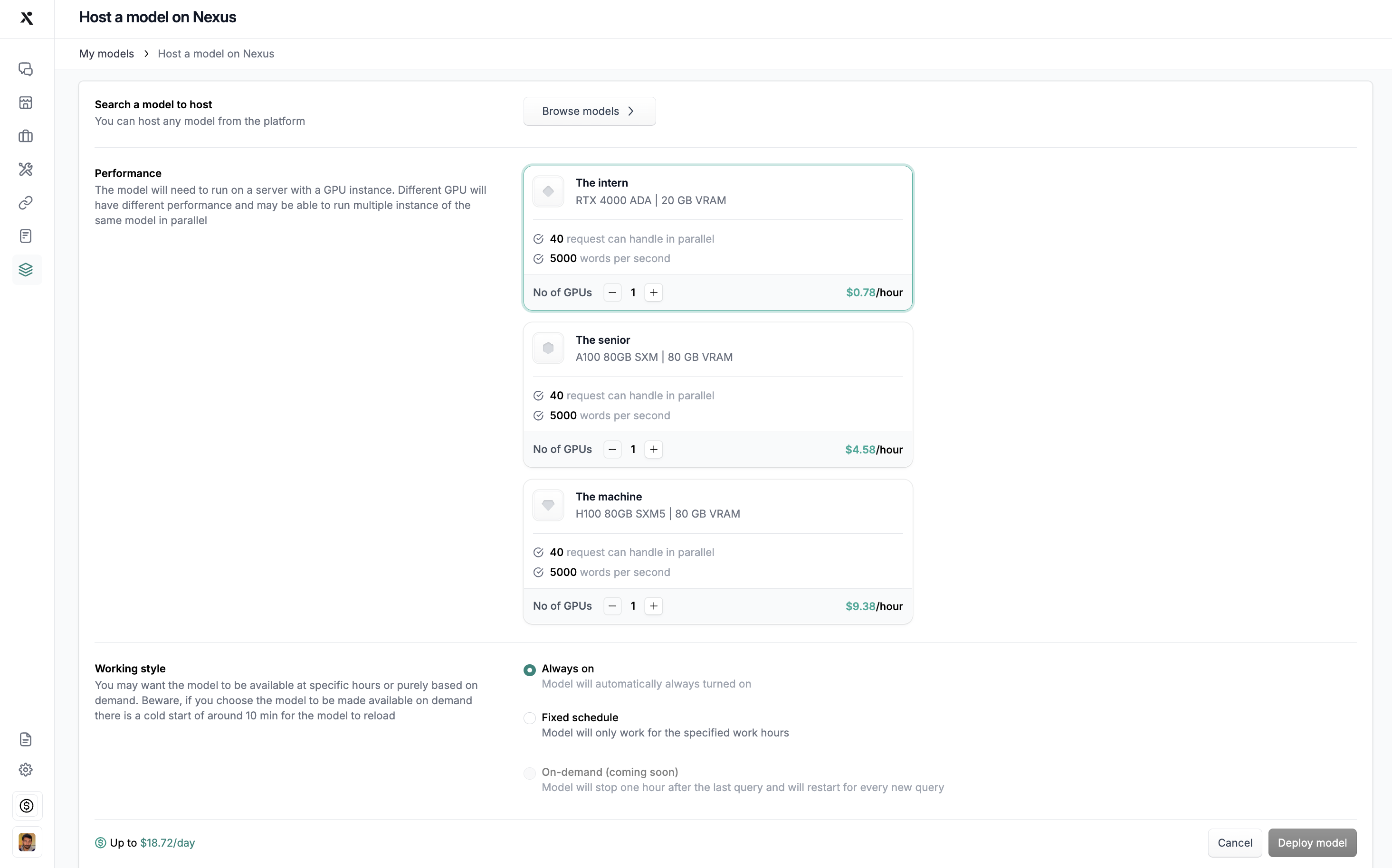

For those seeking bespoke solutions, Nexus also allows the hosting of open-source models, offering a balance between cost, performance, and specific use-case optimization.

Hosting Steps

-

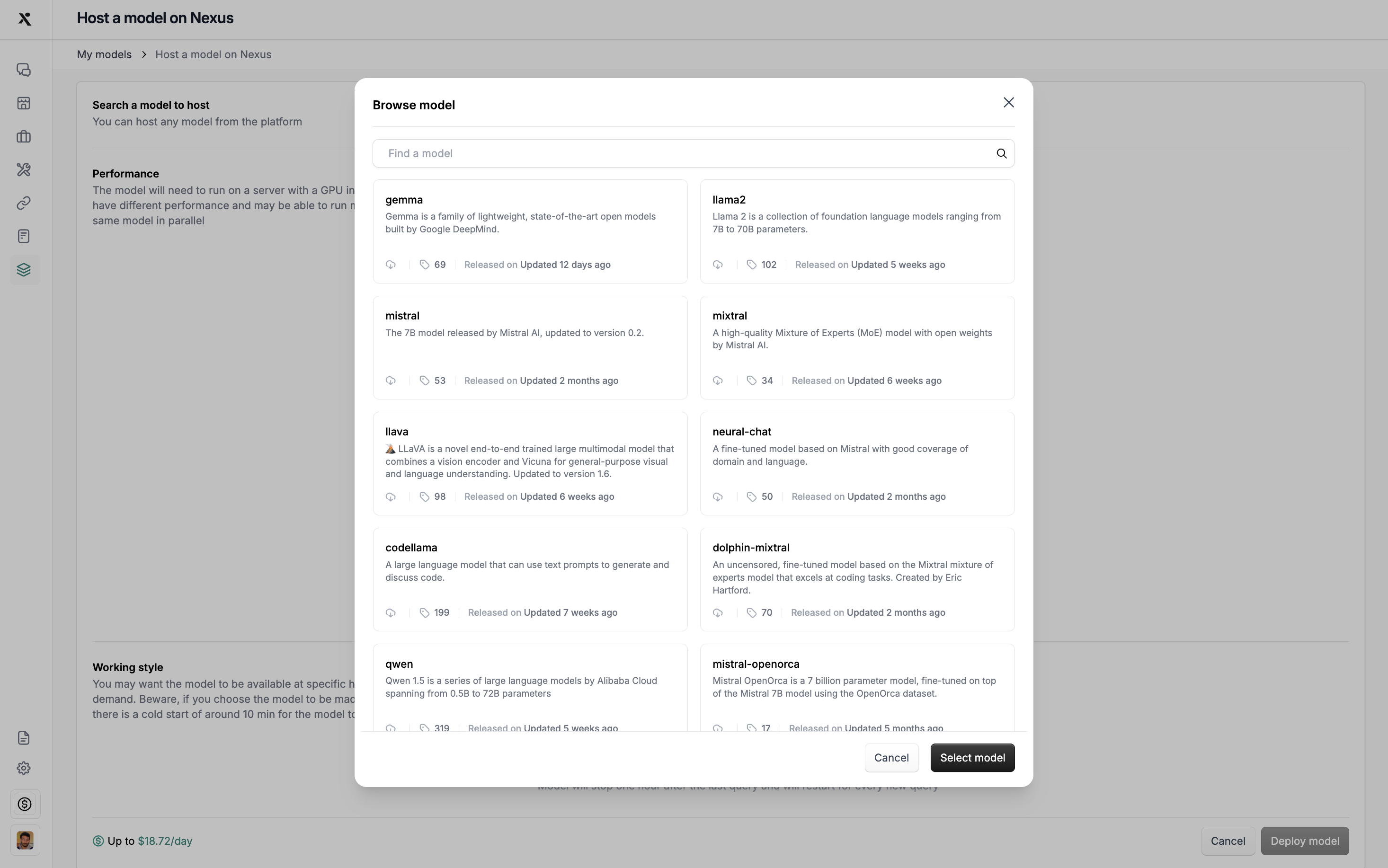

Click "Host your own LLM" from the main model tab.

-

Browse and select the desired open-source model that aligns with your task requirements.

-

Choose a suitable GPU configuration based on your budget and performance needs.

-

Define the operating schedule for your model, ensuring it aligns with your operational hours or demands.

-

Review the daily cost breakdown at the bottom of the configuration page.
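As a rough way to sanity-check the daily cost shown on the configuration page, the sketch below estimates cost from a GPU hourly rate and an operating schedule. It assumes cost scales linearly with running hours and GPU count, which may not match how Nexus bills; the function name and the rate used are hypothetical.

```python
def daily_hosting_cost(gpu_hourly_rate: float,
                       hours_per_day: float,
                       gpu_count: int = 1) -> float:
    """Estimate daily cost as rate x scheduled hours x number of GPUs."""
    if not 0 <= hours_per_day <= 24:
        raise ValueError("hours_per_day must be between 0 and 24")
    if gpu_hourly_rate < 0:
        raise ValueError("gpu_hourly_rate must not be negative")
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    return gpu_hourly_rate * hours_per_day * gpu_count


if __name__ == "__main__":
    # Hypothetical rate of 1.50 per GPU-hour, running 8 hours a day.
    print(daily_hosting_cost(1.5, 8))
```

Comparing an always-on schedule with one limited to working hours shows how much the operating schedule affects the total.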

Nexus’s model management system is designed to provide flexibility, control, and ease, whether you’re integrating an external provider’s model or leveraging the platform’s extensive model library.