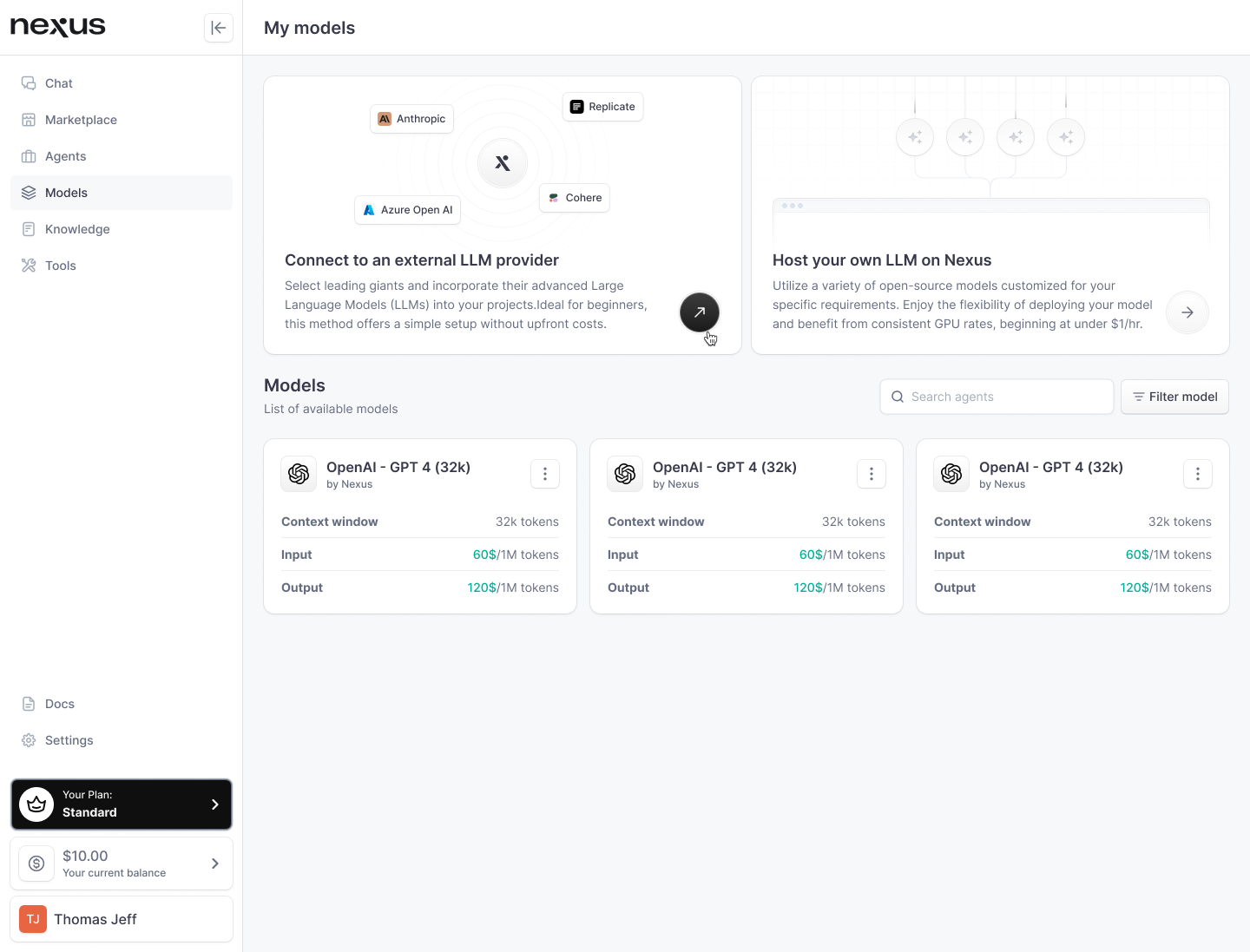

Models

The Model tab on nexus is where you manage and customize the Large Language Models (LLMs) that power your AI agents. Before covering how to link models to tasks, edit model parameters, host models, or add external models, it helps to answer a basic question: why might a user need different models?

Why a User May Want to Use Different Models

LLMs such as OpenAI's GPT-3 and GPT-4 are not one-size-fits-all: they can be adapted to a wide range of use cases. Each model has its own capabilities, cost structure, and configuration options, so users can make strategic choices based on their specific needs.

Diverse Capabilities

Different LLMs have varied strengths. For example, some are generalists like GPT-4, which can handle a wide range of topics but may not be the most efficient choice for specialized tasks. On the other hand, certain models are fine-tuned for specific industries or applications, offering precision and expertise in particular domains.

Cost Efficiency

The cost of using LLMs is not a flat rate across the board. Generalist models, especially the larger and more comprehensive ones, often come at a premium. They can be slower and more expensive to operate. For certain tasks, it might be more cost-effective to use smaller or specialized models that provide faster responses at a lower cost.
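
The trade-off above can be made concrete with a rough back-of-the-envelope estimate. The sketch below assumes per-token pricing; the prices, request volume, and the `estimate_cost` helper are hypothetical placeholders for illustration, not actual rates of any provider or of nexus.

```python
def estimate_cost(requests: int, tokens_per_request: int, price_per_1k_tokens: float) -> float:
    """Estimate total spend, assuming cost scales linearly with tokens processed."""
    total_tokens = requests * tokens_per_request
    return total_tokens / 1000 * price_per_1k_tokens


# Hypothetical prices per 1,000 tokens (placeholders, not real rates).
LARGE_GENERALIST_PRICE = 0.03
SMALL_SPECIALIZED_PRICE = 0.002

# Same hypothetical workload for both models: 10,000 requests of 500 tokens each.
large_cost = estimate_cost(10_000, 500, LARGE_GENERALIST_PRICE)
small_cost = estimate_cost(10_000, 500, SMALL_SPECIALIZED_PRICE)

print(f"large generalist model:  {large_cost:.2f}")
print(f"small specialized model: {small_cost:.2f}")
```

Under these assumed numbers the smaller model is cheaper for the same workload; whether its output quality is sufficient is a separate question that depends on the task.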

Model Configuration

Within a single model, various configurations are available, such as the "temperature" and "top_p" settings. These sampling settings (not to be confused with fine-tuning, which retrains the model itself) let users shape the generation process, influencing the creativity, randomness, and predictability of the output. A lower temperature setting, for instance, yields more predictable and conservative text, whereas a higher temperature produces more creative and diverse content.
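
To illustrate how these two settings act on a model's output, here is a minimal sketch of temperature scaling and top_p (nucleus) filtering over a toy list of token scores. It shows the general technique only; the function names and example scores are assumptions made for illustration, and real providers may implement sampling differently.

```python
import math
import random


def apply_temperature(logits, temperature):
    """Turn raw token scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their cumulative probability reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}  # renormalized over kept tokens


def sample_token(logits, temperature=1.0, top_p=1.0, rng=random):
    """Sample one token index after applying temperature and top_p."""
    candidates = top_p_filter(apply_temperature(logits, temperature), top_p)
    ids = list(candidates)
    weights = [candidates[i] for i in ids]
    return rng.choices(ids, weights=weights, k=1)[0]


toy_logits = [2.0, 1.0, 0.5, -1.0]  # hypothetical scores for four tokens
cold = apply_temperature(toy_logits, 0.2)  # low temperature: mass concentrates on the top token
hot = apply_temperature(toy_logits, 2.0)   # high temperature: mass spreads across tokens
nucleus = top_p_filter(apply_temperature(toy_logits, 1.0), 0.8)
```

A low temperature pushes almost all probability onto the highest-scoring token, which is why output becomes more predictable, while a lower top_p discards the unlikely tail entirely before sampling.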

How nexus Handles Model Management

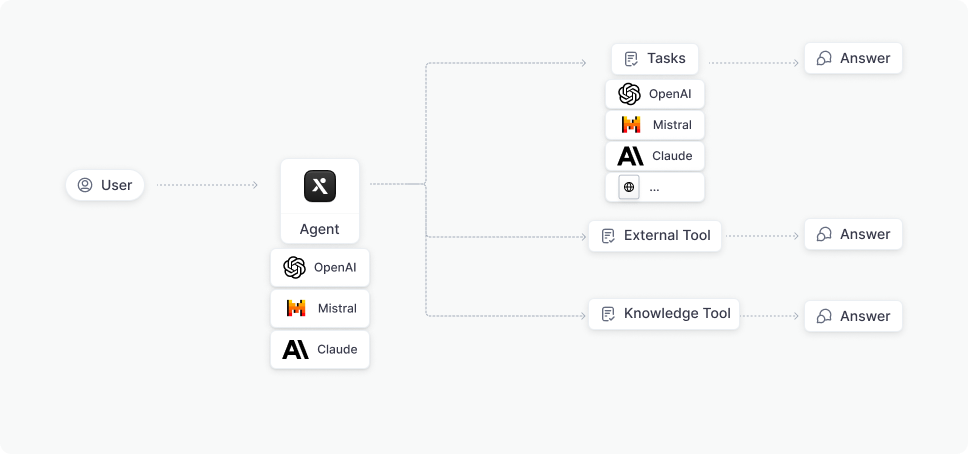

nexus offers a robust framework for LLM management, crucial for powering both tasks and agents.

LLMs within Agents

An agent’s LLM holds its base prompt and list of skills. It is the brain that lets the agent interpret a request and act on it, whether by calling an external tool or using a task. Knowledge context added to an agent's prompt is also processed by this LLM, so that information is integrated into the conversation.

Currently, only latest-generation commercial models with function-calling abilities are compatible with agents on nexus. These models, such as those from Mistral and Anthropic and OpenAI's GPT-4, enable agents to determine and execute the appropriate skills based on user queries.
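
As a rough illustration of what function calling involves, the sketch below shows a model emitting a structured request (a skill name plus JSON arguments) that the surrounding runtime looks up and executes. This is a generic pattern, not the nexus implementation; the skill names, the `SKILLS` registry, and the output format are all hypothetical.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical skill that returns a canned forecast."""
    return f"Sunny in {city}"


def send_email(to: str, subject: str) -> str:
    """Hypothetical skill that pretends to queue an email."""
    return f"Email to {to} queued: {subject}"


# Registry mapping skill names to callables (an assumption for this sketch).
SKILLS = {"get_weather": get_weather, "send_email": send_email}


def execute_skill_call(model_output: str) -> str:
    """Parse a structured call emitted by the model and run the matching skill."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in SKILLS:
        raise KeyError(f"Unknown skill: {name}")
    return SKILLS[name](**call["arguments"])


# Stand-in for what a function-calling model might emit for "What's the weather in Paris?"
model_output = json.dumps({"name": "get_weather", "arguments": {"city": "Paris"}})
result = execute_skill_call(model_output)
print(result)
```

A model without function-calling support would reply in free text only, leaving the runtime nothing structured to dispatch on, which is why such models cannot drive agent skills.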

LLMs within Tasks

For writing tasks, different LLMs can be used to optimize content generation. Linking a particular LLM to a task ensures that the best-suited model is used for the job, which can significantly improve efficiency and output quality. For instance, linking a fine-tuned version of GPT-3.5 to email writing tasks can give better results than using a more generalized model like GPT-4 for the same task.

Organization of the Model Section

The Model section on nexus is structured into comprehensive pages, each dedicated to a specific aspect of model management:

- Adding an External Model: Describes how to add new models to nexus, either from external providers or by hosting an open source model directly on nexus, to improve the functionality of tasks.
- Managing Models: Explains how to link a model to a task and how to update and tweak model parameters to customize response styles.